From CPUs to GPUs to TPUs

Applications such as Google Home, Google Maps, and Google Assistant are becoming part of our day-to-day life. They process huge amounts of data and apply smart algorithms based on Artificial Intelligence, Machine Learning, and Deep Learning. Running these and many similar applications requires state-of-the-art hardware that can process data extremely fast, with individual operations completing in a matter of nanoseconds. The most familiar such hardware is the Central Processing Unit, or CPU. Then there is the Graphics Processing Unit, or GPU, originally intended for image processing and graphical applications on display devices. In 2016, Google introduced a new kind of hardware, the Tensor Processing Unit or TPU, for neural network machine learning applications. This article briefly discusses these three types of hardware: CPU, GPU, and TPU.

CPU (Central Processing Unit): The CPU is the processing unit most commonly used in desktop computers, and it performs fast arithmetic calculations. Modern computers and smartphones have several processors for different tasks, but early desktop computers had only a CPU. A CPU takes instructions sequentially and works through the data one step at a time. These processors have very low latency, so each individual calculation completes quickly. On the other hand, their throughput is low because the data is processed sequentially.

Figure 1: Central Processing Unit (CPU)

GPU (Graphics Processing Unit): These processors are designed to perform high-speed operations on many pieces of data in parallel and were introduced in the 1980s. Mobile phones, personal computers, and gaming consoles use GPUs to give users an exceptional experience by handling computer graphics and image processing efficiently. GPUs are also used in Machine Learning applications based on convolutional neural network algorithms. Nvidia is one of the pioneers of GPU chips; in fact, it was Nvidia that popularized the GPU in 1999.

Figure 2: Graphics Processing Unit (GPU)

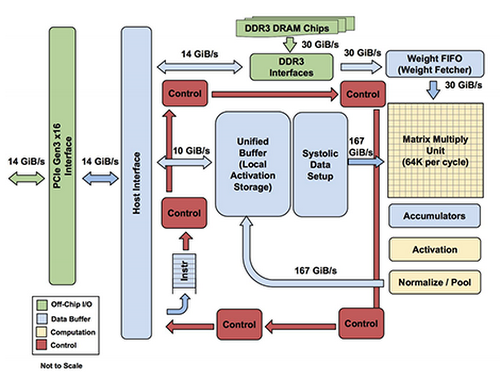
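The contrast described above between sequential CPU processing and parallel GPU processing can be illustrated with a toy step-count model. This is only a sketch under simplifying assumptions: the `lanes` parameter and the function names are hypothetical, and real processors are far more complex (CPUs also have multiple cores and vector units). The brightness-scaling function shows the kind of work that parallelizes well, because each output pixel depends only on its own input pixel.

```python
import math


def sequential_steps(num_items: int) -> int:
    """Steps needed when one item is processed per step (simplified CPU view)."""
    return num_items


def parallel_steps(num_items: int, lanes: int) -> int:
    """Steps needed when `lanes` items are processed per step (simplified GPU view).

    `lanes` is a hypothetical parameter, not a figure for any real chip.
    """
    return math.ceil(num_items / lanes)


def scale_pixels(pixels, gain):
    """Brighten each pixel independently, clamping the result to 255.

    No output depends on any other output, so every element could be
    computed at the same time on parallel hardware.
    """
    return [min(255, p * gain) for p in pixels]


pixels = [10, 20, 30, 40, 50, 60, 70, 80]
print(scale_pixels(pixels, 2))
print("sequential steps:", sequential_steps(len(pixels)))
print("parallel steps with 4 lanes:", parallel_steps(len(pixels), lanes=4))
```

In this model, eight pixels take eight steps when handled one at a time but only two steps with four lanes, which is the throughput advantage the GPU section refers to.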

TPUs (Tensor Processing Units): TPUs are capable of processing thousands of matrix operations in a single clock cycle. Applications such as Google Maps and Google Assistant use the processing capability of TPUs for neural network computation, fast calculations, and decision making. The TPU's instruction set follows the Complex Instruction Set Computer (CISC) style, and Google reports that the TPU delivers 15-30X better performance than contemporary CPUs and GPUs on neural network workloads. The Matrix Multiplier Unit (MXU), Unified Buffer (UB), and Activation Unit (AU) are the key computational resources present in TPUs, as shown in the block diagram in Fig. 3.

Figure 3: Block Diagram of TPU
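To make the roles of the three blocks more concrete, the following minimal sketch mimics the data flow of a single neural network layer: inputs held in a buffer are multiplied by a weight matrix (the job of the MXU) and the result is passed through an activation function (the job of the Activation Unit). It is plain Python for illustration, not TPU code. The function names are hypothetical, and the choice of ReLU as the activation is an assumption, since the article does not name a specific function.

```python
def matmul(inputs, weights):
    """Multiply two matrices given as lists of rows (the MXU's job, done slowly)."""
    rows, inner, cols = len(inputs), len(weights), len(weights[0])
    out = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                # One multiply-accumulate (MAC) operation per iteration.
                out[i][j] += inputs[i][k] * weights[k][j]
    return out


def relu(matrix):
    """Apply ReLU element-wise (assumed activation; stands in for the Activation Unit)."""
    return [[max(0, value) for value in row] for row in matrix]


def dense_layer(inputs, weights):
    """Buffer -> matrix multiply -> activation, the flow sketched in the block diagram."""
    return relu(matmul(inputs, weights))


def mac_count(rows, inner, cols):
    """Number of multiply-accumulate operations in a rows x inner by inner x cols product."""
    return rows * inner * cols


inputs = [[1, 2], [3, 4]]
weights = [[1, -1], [-1, 1]]
print(dense_layer(inputs, weights))
print("MAC operations:", mac_count(2, 2, 2))
```

Even this 2 x 2 example needs eight multiply-accumulate operations, and the count grows with the product of the matrix dimensions. A sequential loop performs them one at a time, which is why dedicated hardware that performs many of them per clock cycle pays off for neural networks.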

The TPU is an Application-Specific Integrated Circuit (ASIC) built on a 28 nm process. ASIC development normally takes several years, but Google designed, developed, and deployed TPU processors in just 15 months. Google has been using TPUs in its data centers for quite some time to accelerate neural network computations. Neural networks are set to play a dominant role in computing, and Google's TPU could become an important part of delivering fast, smart, and affordable services to users.

Prof. Lipika Gupta - Associate Professor (ECE), Chitkara University

References

- https://cloud.google.com/blog/products/gcp/an-in-depth-look-at-googles-first-tensor-processing-unit-tpu

- https://cloud.google.com/blog/products/gcp/google-supercharges-machine-learning-tasks-with-custom-chip
